Hi, I'm Mohamad Zamini.


Self-driven and passionate about advancing machine learning, I focus on pushing the boundaries of Large Language Models (LLMs) through innovative research and development. As a final-year PhD candidate with hands-on experience optimizing LLMs from a recent internship, I am committed to solving complex real-world problems with cutting-edge AI technology. My ambition is to contribute to the future of AI by developing scalable, efficient models that can transform industries and enhance human-computer interaction.

About

I am a PhD candidate in Computer Science focused on optimizing Multimodal Large Language Models (MLLMs) to enhance their reasoning capabilities. My work involves accelerating LLMs through techniques such as pruning while maintaining or improving performance. I have hands-on experience with foundation models from my internship at Numenta Inc., and I am currently developing approaches such as Mixture of Depths (MoD), Mixture of Experts (MoE) for resamplers, and attention pruning to push the boundaries of MLLM efficiency and scalability.

- Programming Languages: Python, C++

- Databases: MySQL, MongoDB, PostgreSQL

- Libraries & Frameworks: PyTorch, TensorFlow, Hugging Face Transformers, Keras, NumPy, Pandas, OpenCV

- Model Optimization & Deployment: ONNX, TensorRT, TorchServe, FastAPI

- Tools & Platforms: Git, Docker, Kubernetes, AWS, GCP, Azure, JIRA, Weights & Biases (wandb)

Seeking a challenging position that leverages my expertise in Machine Learning and Software Engineering, offering opportunities for professional development, innovative experiences, and personal growth.

Experience

- Developed an LLM analytics agent to uncover insights from large-scale telemetry data, enabling natural-language querying and multi-turn follow-ups over structured session logs.

- Tools: Python, PyTorch

- Fine-tuned LLMs, including Mistral, LLaMA, and GPT, using activation sparsity and attention sparsity to optimize performance.

- Applied techniques such as k-winners-take-all (KWTA), dynamic context pruning, and KV caching to improve model efficiency.

- Tools: Python, PyTorch, Accelerate, GPT, LLaMA

- During my internship at Petrolern as a Digital Innovation Intern, I gained experience in both machine learning and data compression techniques.

- I developed a semantic compression technique that uses a deep autoencoder to map data tuples into a lower-dimensional representation.

- As a machine learning engineer, I built models for analyzing geothermal data and improved their performance through algorithmic optimization.

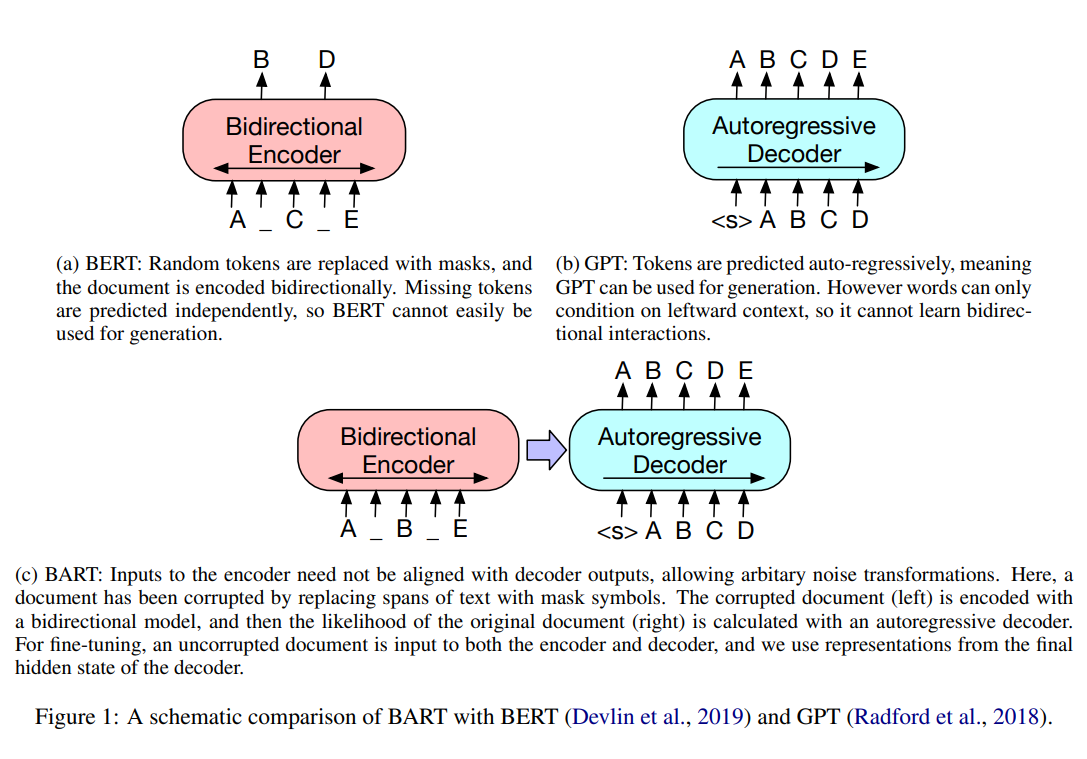

- Fine-tuned models such as BART for summarization of Persian text.

- Implemented matrix factorization for topic modeling.

- Used BiLSTM-CRF models for sequence tagging.

- Tools: Python, Scikit-learn, NLTK

Projects

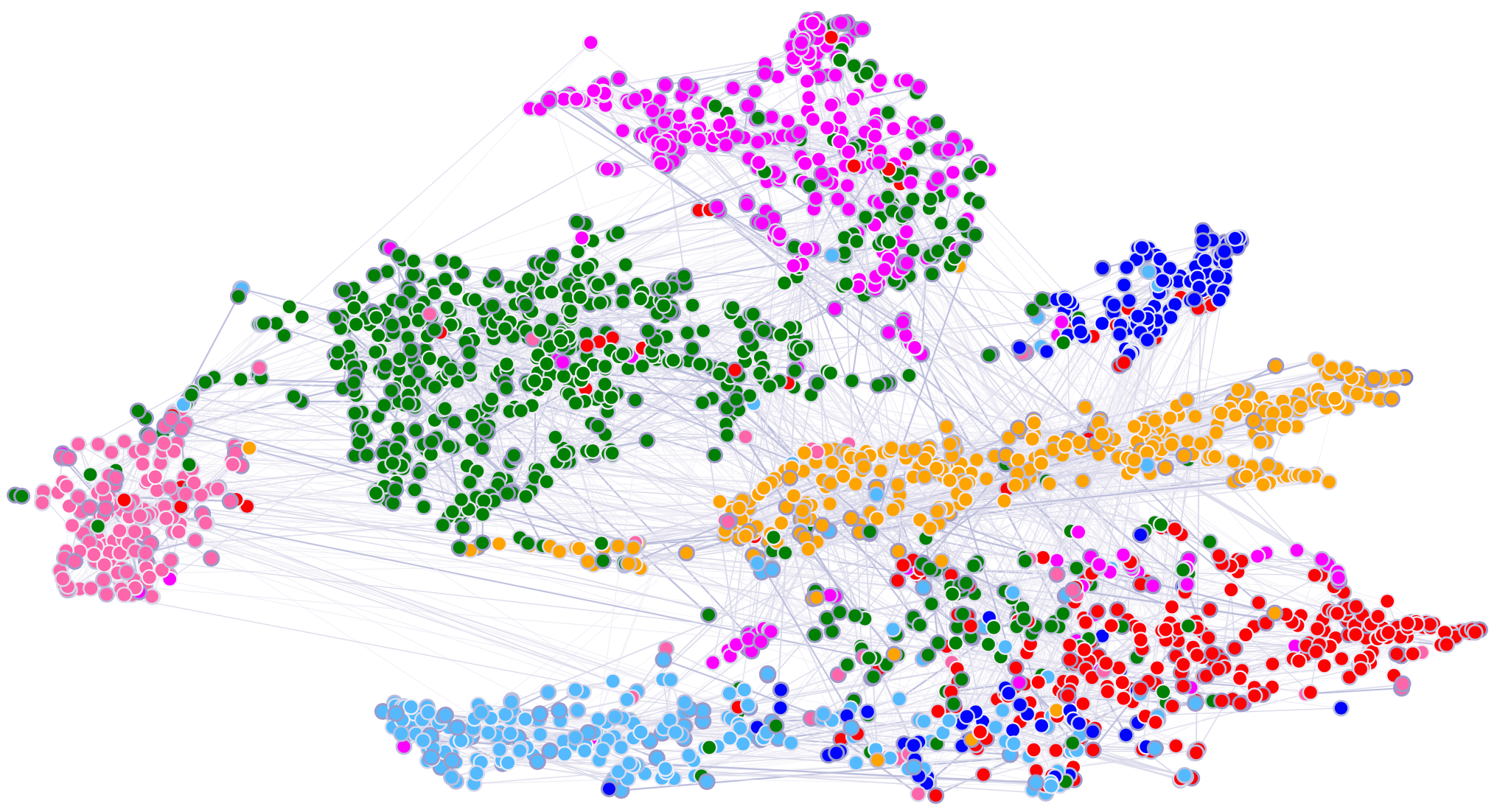

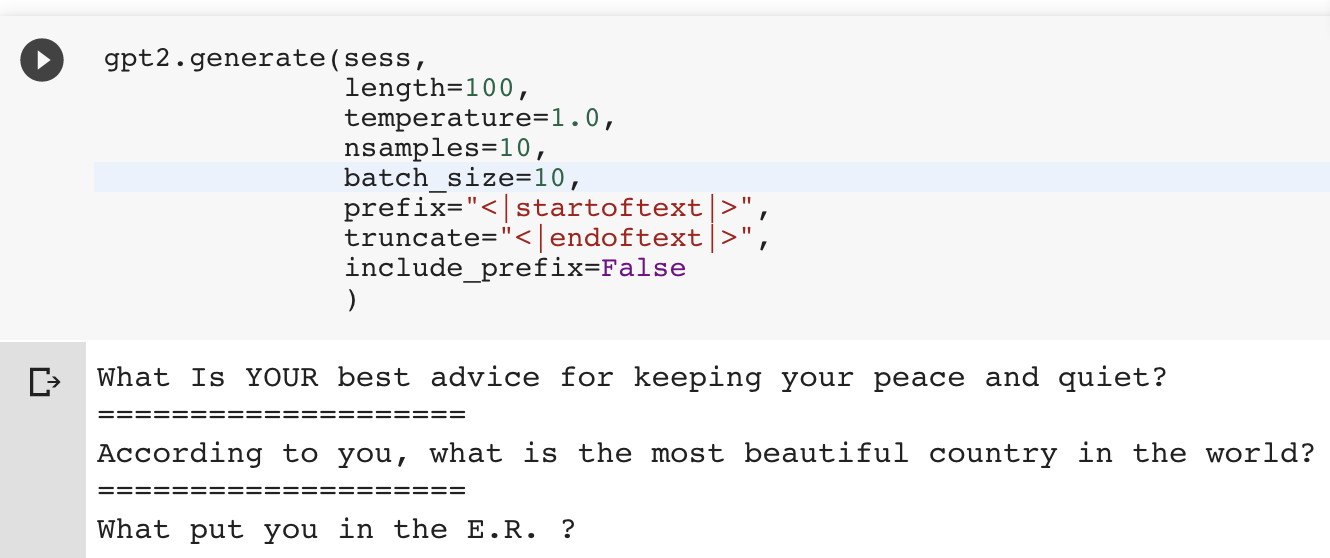

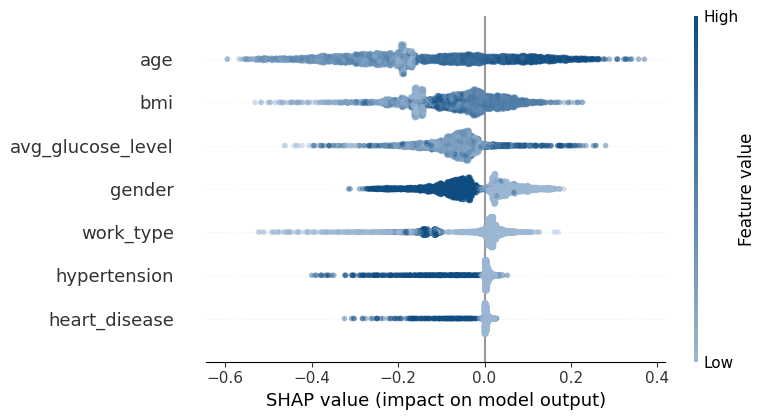

SHAP vs. LIME vs. ELI5

- Tools: Python, PyTorch

- To explain the model's predictions, the project uses the interpretability tools SHAP (SHapley Additive exPlanations), LIME (Local Interpretable Model-agnostic Explanations), and ELI5 (Explain Like I'm 5). These tools show how the model makes decisions and highlight the importance of different features in predicting strokes.

Skills

Languages and Databases

Python

HTML5

CSS3

MySQL

PostgreSQL

Shell Scripting

Libraries

NumPy

Pandas

OpenCV

scikit-learn

matplotlib

NLTK

Frameworks

Django

Flask

Bootstrap

Keras

TensorFlow

PyTorch

Other

Git

AWS

Docker

Education

Laramie, WY

Degree: PhD in Computer Science

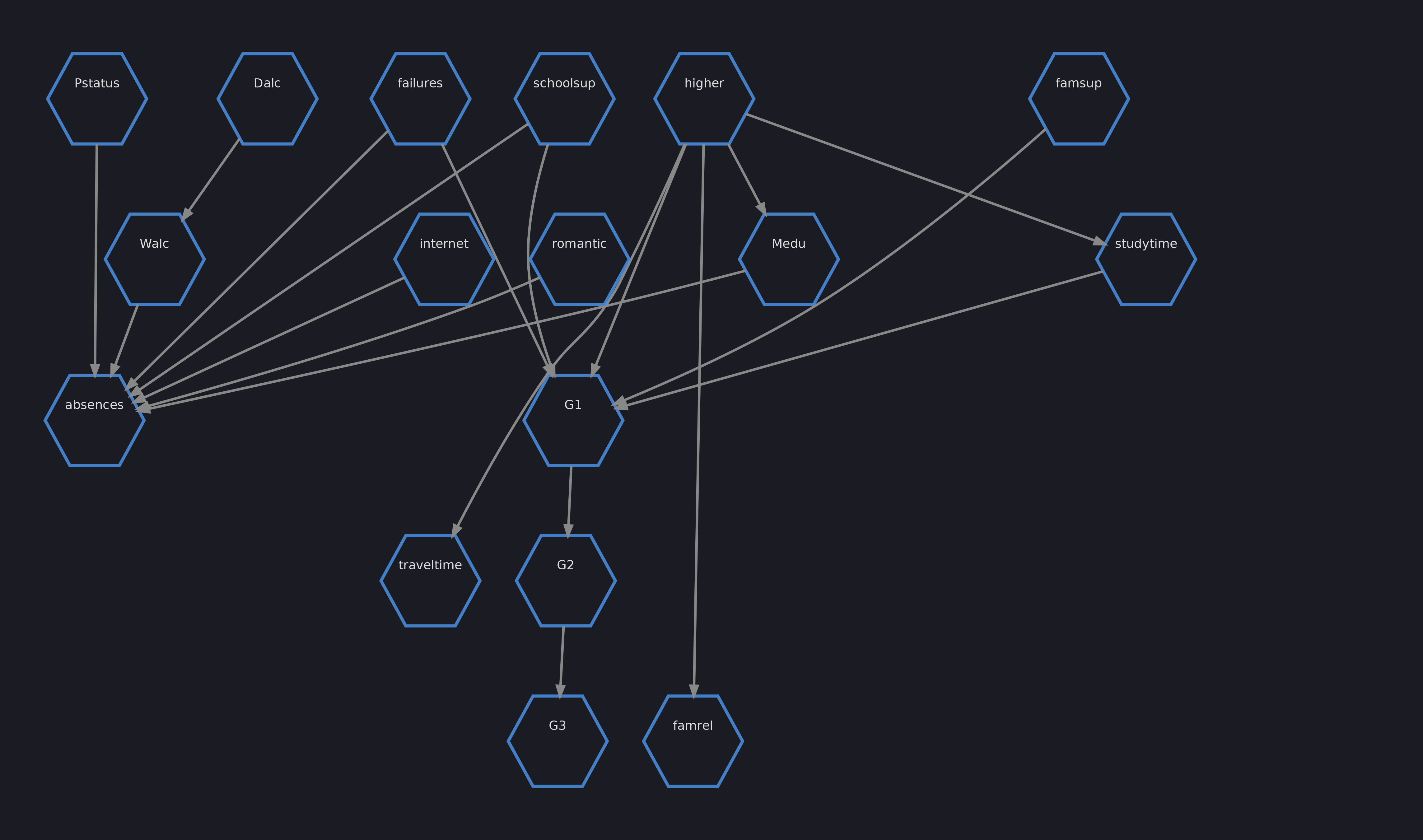

Area of Study: Causal Reasoning for Improving Generalization in Visual Question Answering

Relevant Coursework:

- Intro to Artificial Intelligence

- Machine Learning

- High Performance Computing & Paradigms

- Advanced Image Processing

- Neural and Fuzzy Systems

Tehran, Iran

Degree: Master of Information Technology

CGPA: 3.68/4.0

Relevant Coursework:

- Artificial Neural Networks

- Neural and Fuzzy Systems